This fuck of a problem cost me more than 4 hours to solve. I have to note this down somewhere to take the edge off..

If you are using Linux (Ubuntu in my case)

If your MapReduce job is picking up input data that is not within your input folder

If you delete the content of your input folder and MapReduce still reads "ghost data"*

If you have renamed your input folder and the problem still continues

If you have deleted hadoop temp directories and the problem still continues

If you have rebooted your machine and emptied your trash and the problem still continues

FFS make sure that the input directory is really empty! Text editors on Linux create backup files that end with "~". The file manager hides them by default, so they do not show up in the directory content and the folder looks empty, but MapReduce still reads them. Run ls -la on the directory in a terminal to see what is really in there.
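If you would rather check this from Java before starting the job, something like the following could work. It is only a rough sketch: GhostFileFinder and findGhostFiles are names I made up for illustration, they are not part of Hadoop, and the "input" default folder is an assumption.

```java
import java.io.File;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical helper (not part of Hadoop): finds editor backup files in a folder.
final class GhostFileFinder {

    private GhostFileFinder() {
    }

    // Returns the names of all entries ending with "~", sorted alphabetically.
    static List<String> findGhostFiles(File inputDir) {
        List<String> ghosts = new ArrayList<>();
        // list() returns null if inputDir does not exist or is not a directory
        String[] names = inputDir.list();
        if (names == null) {
            return ghosts;
        }
        Arrays.sort(names);
        for (String name : names) {
            if (name.endsWith("~")) {
                ghosts.add(name);
            }
        }
        return ghosts;
    }

    public static void main(String[] args) {
        // "input" is an assumed default folder name
        File inputDir = new File(args.length > 0 ? args[0] : "input");
        for (String name : findGhostFiles(inputDir)) {
            System.out.println("ghost file: " + name);
        }
    }
}
```

Run it against your input folder; if it prints anything, delete those files and try the job again.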

:|

*Ghost Data: Data that is not visible and seems not to be there.

Wednesday, 19 December 2012

Thursday, 13 December 2012

XML Writable (Serializing) and the InstantiationException

If you want to serialize (encode) non-bean objects in Java, you first have to give the class readFields() and write() methods so there is a definition of how the data in the object is meant to be stored and re-created. In short, you need to make your object Writable in Hadoop terms.


Exception:

java.lang.InstantiationException:

(CLASS NAME YOU ARE TRYING TO SERIALIZE)

Continuing ...

java.lang.Exception: XMLEncoder: discarding statement ArrayList.add(MyObject);

Continuing ...

java.lang.InstantiationException:

(CLASS NAME YOU ARE TRYING TO SERIALIZE)

Continuing ...

java.lang.Exception: XMLEncoder: discarding statement ArrayList.add(MyObject);

Continuing ...

SITUATION BEFORE:

Object class (this is the class of the object you would like to serialize):

public class MyObject {

[VARIABLES]

[CONSTRUCTOR(S)]

[GETTERS&SETTERS]

}

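Call from main (encode and print out):

import java.beans.XMLEncoder;
import java.io.ByteArrayOutputStream;

public static void main(String[] args) {
    ByteArrayOutputStream buffer = new ByteArrayOutputStream();
    XMLEncoder encoder = new XMLEncoder(buffer);
    // javaBean is the object (or list of MyObject instances) you want to serialize
    encoder.writeObject(javaBean);
    encoder.close();
    System.out.println(buffer.toString());
}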

AFTER:

public class MyObject implements Writable {

    [VARIABLES]

    [CONSTRUCTORS]

    [GETTERS&SETTERS]

    @Override
    public void write(DataOutput out) throws IOException {
        WritableUtils.writeString(out, variable1);
        WritableUtils.writeString(out, variable2);
        WritableUtils.writeString(out, variable3);
    }

    @Override
    public void readFields(DataInput in) throws IOException {
        variable1 = WritableUtils.readString(in);
        variable2 = WritableUtils.readString(in);
        variable3 = WritableUtils.readString(in);
    }
}

The call in main is the same..

It is very important that the order of variables in read and write is the same. If you put variable1 in the first place of write(), it needs to be in the first place of readFields() too so it can be put back correctly. Also your class MUST have a public no-args constructor: XMLEncoder creates the object through it, and without one you get the InstantiationException shown above.
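To see why the order matters, here is a small round trip that runs without Hadoop. It is a sketch under assumptions: PersonRecord and its fields are made up for illustration, and it uses the JDK's DataOutput/DataInput directly instead of WritableUtils, so it only mirrors the shape of a Writable.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInput;
import java.io.DataInputStream;
import java.io.DataOutput;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;

// Hypothetical stand-in for MyObject; mirrors write()/readFields() with JDK classes only.
class PersonRecord {
    String firstName;
    String lastName;
    int age;

    // No-args constructor: an empty instance is created first, then filled by readFields().
    PersonRecord() {
    }

    PersonRecord(String firstName, String lastName, int age) {
        this.firstName = firstName;
        this.lastName = lastName;
        this.age = age;
    }

    // Fields are written in the order firstName, lastName, age ...
    void write(DataOutput out) throws IOException {
        out.writeUTF(firstName);
        out.writeUTF(lastName);
        out.writeInt(age);
    }

    // ... and must be read back in exactly the same order.
    void readFields(DataInput in) throws IOException {
        firstName = in.readUTF();
        lastName = in.readUTF();
        age = in.readInt();
    }

    // Serializes the record to bytes and reads it back into a fresh instance.
    static PersonRecord roundTrip(PersonRecord original) {
        try {
            ByteArrayOutputStream buffer = new ByteArrayOutputStream();
            original.write(new DataOutputStream(buffer));
            PersonRecord copy = new PersonRecord();
            copy.readFields(new DataInputStream(new ByteArrayInputStream(buffer.toByteArray())));
            return copy;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

If you swap two lines in readFields(), the two strings end up in each other's fields, or the read fails outright when the types differ.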

Monday, 10 December 2012

Solution: “utility classes should not have a public or default constructor”


If a class only contains static methods, you probably want to use its methods "directly" rather than instantiating it first. These kinds of classes are more like tools/utilities than blueprints for objects. Checkstyle warns you in this case that the class can still be instantiated. A little bit annoying for my liking ;)

Solution:

make the class final:

public final class myclass {

...

}

and create an empty private constructor:

private myclass() {

}

that should do the trick!
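Put together, a complete utility class could look like this. StringTools and repeat() are made-up names for illustration; throwing from the constructor is an optional extra, not something Checkstyle asks for.

```java
// Hypothetical utility class, used only for illustration.
final class StringTools {

    // Private constructor: nobody outside this class can write "new StringTools()".
    private StringTools() {
        throw new AssertionError("utility class, do not instantiate");
    }

    // Static helper that is called directly on the class.
    static String repeat(String text, int times) {
        StringBuilder result = new StringBuilder();
        for (int i = 0; i < times; i++) {
            result.append(text);
        }
        return result.toString();
    }
}
```

You then call it as StringTools.repeat("ab", 3) without ever creating an object.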

Monday, 3 December 2012

NPE: org.apache.hadoop.io.serializer.SerializationFactory.getSerializer(SerializationFactory.java:73) (SOLVED)

My NullPointerException looked something like this:

java.lang.NullPointerException
    at org.apache.hadoop.io.serializer.SerializationFactory.getSerializer(SerializationFactory.java:73)
    at org.apache.hadoop.io.SequenceFile$Writer.init(SequenceFile.java:959)
    at org.apache.hadoop.io.SequenceFile$Writer.<init>(SequenceFile.java:892)
    at org.apache.hadoop.io.SequenceFile.createWriter(SequenceFile.java:393)
    at org.apache.hadoop.io.SequenceFile.createWriter(SequenceFile.java:354)
    at org.apache.hadoop.io.SequenceFile.createWriter(SequenceFile.java:476)
    at org.apache.hadoop.mapreduce.lib.output.SequenceFileOutputFormat.getRecordWriter(SequenceFileOutputFormat.java:61)
    at org.apache.hadoop.mapred.ReduceTask$NewTrackingRecordWriter.<init>(ReduceTask.java:569)
    at org.apache.hadoop.mapred.ReduceTask.runNewReducer(ReduceTask.java:638)
    at org.apache.hadoop.mapred.ReduceTask.run(ReduceTask.java:417)
    at org.apache.hadoop.mapred.LocalJobRunner$Job.run(LocalJobRunner.java:260)

After a few Google searches I found the solution. Turns out that you need to add the serialization libs to the Job configuration manually.. So my conf and the setup looks like this:

Configuration conf = new Configuration();
conf.set("io.serializations", "org.apache.hadoop.io.serializer.JavaSerialization,"
        + "org.apache.hadoop.io.serializer.WritableSerialization");

I cannot understand Chinese, but good thing that programming code is (mostly) universal! :D

Link to the original thread: Link

Thursday, 29 November 2012

Download link: Hadoop 1.0.3 sources

Hadoop 1.0.3 sources. Why are they not available on the website? I do not know..

Maven could not help me out on downloading sources either :/

Anyway, here it is:

http://rapidshare.com/files/2237720743/hadoop-core-1.0.3-sources.jar

enjoy! :)


Wednesday, 21 November 2012

Java - Continue process after an Exception

Easier than I thought actually..


Execution stops when an exception is unhandled.. So in case you have a MalformedURLException, you need to catch that one so your application knows what to do and how to continue in that case..

Surround the lines where the exception may occur with a

try {
}

and catch the possible exception with a

catch (MalformedURLException e) {
}

Basically, just handle your exceptions to continue your process :)

(I'm still new to this stuff.. feel free to correct me)
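Here is how that might look in a loop, as a small sketch. UrlParser and parseAll are names invented for this example; the point is only that the catch block lets the loop carry on with the next entry instead of aborting.

```java
import java.net.MalformedURLException;
import java.net.URL;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper, used only for illustration.
final class UrlParser {

    private UrlParser() {
    }

    // Parses every candidate and returns only those that are valid URLs.
    @SuppressWarnings("deprecation") // new URL(String) is deprecated in recent JDKs but still available
    static List<URL> parseAll(List<String> candidates) {
        List<URL> valid = new ArrayList<>();
        for (String candidate : candidates) {
            try {
                valid.add(new URL(candidate));
            } catch (MalformedURLException e) {
                // Handled here, so the loop simply continues with the next entry.
                System.err.println("skipping '" + candidate + "': " + e.getMessage());
            }
        }
        return valid;
    }
}
```

Without the try/catch, the first bad entry would end the whole method and the remaining entries would never be looked at.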

Monday, 19 November 2012

Create Sony SenseMe playlists for your Music collection on PC

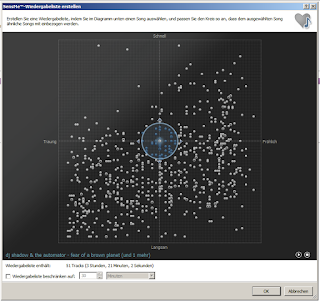

The free software Media Go from Sony can actually do the same thing with your music collection on PC. All you need to do is import your songs into its library and let the program do its work (it has taken about 4 hours for my 33 GB of data so far.. still working). After it's finished, you can create SenseMe playlists by marking an area on the "mood scale", let's say.. The directions are called Fast, Slow, Sad, Happy. As you can see from my diagram, I quite dig sad slow songs :D

My explanation is terrible once again. But you get the idea! (I hope :) )

Wednesday, 14 November 2012

Push and Pull files within Hadoop distributed cache

Push

Within run() (the place where you set up your mapper and reducer classes):

DistributedCache.addCacheFile(new URI("file:///home/raymond/file.txt"), conf);

conf is the Configuration I set up with:

Configuration conf = new Configuration();

Use file:// for local filesystem and hdfs:// for targeting the hdfs filesystem.

Pull

Now you need to know where the framework has cached your file.. Within mapper (or reducer) class you can now say:

Path[] localFiles = DistributedCache.getLocalCacheFiles(context.getConfiguration());

localFiles now contains the paths of all cached files. If you have used only one file, you can access it by saying:

String path = localFiles[0].toString();

Now you know where it's located. You can pass it to a FileReader to read out the contents.
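The reading part is plain Java and does not need Hadoop at all. A minimal sketch, assuming the cached file is a text file; CachedFileReader and readLines are names I made up, and the path argument would be the string taken from localFiles[0] above.

```java
import java.io.BufferedReader;
import java.io.FileReader;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper, used only for illustration.
final class CachedFileReader {

    private CachedFileReader() {
    }

    // Reads every line of the text file at the given local path.
    static List<String> readLines(String path) {
        List<String> lines = new ArrayList<>();
        // try-with-resources closes the reader even if reading fails
        try (BufferedReader reader = new BufferedReader(new FileReader(path))) {
            String line;
            while ((line = reader.readLine()) != null) {
                lines.add(line);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return lines;
    }
}
```

In a mapper you would typically do this once in setup() and keep the lines in a field, rather than re-reading the file for every record.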

As usual I'm trying to keep things as simple as possible.. Let me know if I definitely should mention something else about this process :)


Hadoop - Mapreduce - java.lang.NoClassDefFoundError:

I am working with Eclipse. After adding a file to the Hadoop distributed cache (found the howto on this LINK) I received the error message:

INFO: Cached file:///home/aatmaca/git/common/rules.txt as /tmp/hadoop-root/mapred/local/archive/-5239344160850859454_-776894720_2117948037/file/home/git/common/rules.txt
14.11.2012 11:59:08 org.apache.hadoop.mapred.JobClient$2 run
INFO: Cleaning up the staging area file:/tmp/hadoop-root/mapred/staging/root-542082906/.staging/job_local_0001
Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/commons/io/FileUtils
    at org.apache.hadoop.fs.FileUtil.getDU(FileUtil.java:456)
    at org.apache.hadoop.filecache.TrackerDistributedCacheManager.downloadCacheObject(TrackerDistributedCacheManager.java:452)
    at org.apache.hadoop.filecache.TrackerDistributedCacheManager.localizePublicCacheObject(TrackerDistributedCacheManager.java:464)

I was sure that this is not a Hadoop-specific problem. A simple import of org.apache.commons.io.FileUtils didn't do the trick (yes, I'm still new to this Java stuff :) ), so I had to ask my coding mentor for help..

Solution 1:

Download the latest commons-io JAR from:

http://mvnrepository.com/artifact/commons-io/commons-io/

Within Eclipse:

Right click on project -> Properties -> Build Path -> Add External JARs..

and import your JAR file. It should appear now within your "Referenced Libraries" folder.

Now you should be able to execute the application without error messages.

Solution 2:

If it is a Maven project you are working on, just edit your pom.xml and add the commons-io dependency to it:

<dependency>
    <groupId>commons-io</groupId>
    <artifactId>commons-io</artifactId>
    <version>2.4</version>
</dependency>

The JAR will be downloaded automatically and added to your Maven dependencies..
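If you want to confirm that the JAR really ended up on the classpath, a quick check like this might help. It is only a sketch: ClasspathCheck and isOnClasspath are made-up names, and it relies on nothing but the standard Class.forName lookup.

```java
// Hypothetical helper, used only for illustration.
final class ClasspathCheck {

    private ClasspathCheck() {
    }

    // Returns true if a class with the given fully qualified name can be found.
    static boolean isOnClasspath(String className) {
        try {
            // initialize=false: only look the class up, do not run its static initializers
            Class.forName(className, false, ClasspathCheck.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        String name = "org.apache.commons.io.FileUtils";
        System.out.println(name + " on classpath: " + isOnClasspath(name));
    }
}
```

Run it with the same classpath as your job; if it reports that the class is missing, the import or the dependency has not taken effect yet.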

Good luck!

Thursday, 8 November 2012

Borderlands 2 sucks!

This game fucking sucks! And I really really tried..

I don't remember why, but I really loved the first episode. The missions were kind of creative and there was some kind of story development going on that you could follow.

I got really pissed off at how simple and boring the missions got in the second game! Trying to find a home for a dog, collecting medicine for brain-diseased people, collecting organs for Zed, collecting tapes for Tannis, collecting flowers and magazines for that fucking Catch-A-Ride redneck! Really?? These are the best ideas you could come up with? Running around and collecting stuff? I am uninstalling this game after giving it a try for more than three weeks. I am almost done with the story but I just cannot care anymore. It is getting more and more boring after every mission. I cannot care anymore what the vault is all about and what happens if you find the source of iridium!! Fuck you people!

But I have to say, moxxi is one horny, busty, dirty whore!


Some useful Linux shell commands

Don't judge, this pile's evolution has just started! ;)

Splitting a file into several parts (based on filesize)

split -b 200M BigFile.txt

This will split the file into 200 MB pieces. Use M for megabytes and K for kilobytes; a number without a suffix means bytes (in GNU split the suffix b stands for 512-byte blocks, not bytes). Check out the manual for more options.

Friday, 5 October 2012

Things you should always keep ready at your workplace

- A pair of clean black socks

- A clean white shirt

- Umbrella

- A Backup key to your flat

- 20€ "just in case" money

- Handtowel

- Hairgel/Wax (if you use it)

- Deodorant

Saturday, 29 September 2012

Fonic internet settings

- Click the following link and have the access settings sent to you by entering your mobile phone number: http://www.fonic.de/html/handy-einstellungen.html

- About a minute later you receive the settings by SMS. Accept or install the changes on the phone.

- Switch the phone off and on again

Name: Fonic Web conn

APN: pinternet.interkom.de

Proxy: not set

Port: not set

Username: not set

Password: not set

Server: not set

MMSC: not set

MMS proxy: not set

MMS port: not set

MCC: 262

Authentication type: None

APN type: default.supl

APN protocol: IPv4

Bearer: unspecified

Thursday, 15 March 2012

Setting up your own remote server (VNC & DYNDNS)

1-Static IP assignment

Your server needs to have the same local IP every time so the router can always reach it. In my case, I assigned the server the IP 192.168.1.120. The gateway address is my router's IP, 192.168.1.1, and I used Google's DNS servers: 8.8.8.8 and 8.8.4.4

2-Install RealVNC

Install RealVNC to be able to access your server remotely using VNC. I recommend installing it in service mode so that the program starts with Windows automatically. RealVNC uses the standard VNC port (5900) for incoming connections. I wasn't comfortable with that, so I changed it to a number of my own (let's call it 2012 for this tutorial).

3-Open and forward a router port

Go to your router's configuration page (usually something like 192.168.0.1 or 192.168.1.1) and forward port 2012 to your server's IP address 192.168.1.120, so the router passes requests on this port to your server. It's like saying "if anyone knocks on door nr. 2012, send the visitor to me" :)

4-Dynamic DNS hostname

Register a hostname at no-ip.com. For this example, let's call it homeserver.no-ip.org. Then install the No-IP update client on your server. Make sure the program is always running in the background (I'm skipping the basic configuration details of the program at this point).

5-Firewall rule

Your firewall, in my case the default Windows firewall, is probably blocking our port 2012, which needs to be open for incoming traffic. Go to the firewall settings and set up an exception for port 2012 for both incoming and outgoing traffic (possibly only incoming is necessary, I haven't tried it yet :) ).

Now we can test it! Open a VNC client on another computer (RealVNC Viewer for instance) and type in: homeserver.no-ip.org:2012. If you set up a password in the RealVNC configuration, it should ask for it now. RealVNC apparently also supports a Java viewer, so you'd be able to connect to your server using a web browser. If you've installed the free version of RealVNC, the port for this should be the port you've set up minus 100 (so in our case it's 1912). Type in homeserver.no-ip.org:1912 (Java needs to be installed for this, on the machine where the browser runs).

Not working?:

-If you see the login page of your router, the port forwarding or the static IP assignment wasn't done correctly. Check which IP address your server has and which one has been entered for the port forwarding on your router.

-Maybe you didn't set up your firewall correctly? Turn your firewall off temporarily to find out.
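When it is not working, it helps to know whether the port can be reached at all before blaming the VNC client. A small sketch in Java, assuming the hostname and port from this tutorial; PortCheck and isReachable are names I made up, and the 3 second timeout is an arbitrary choice.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Hypothetical helper, used only for illustration.
final class PortCheck {

    private PortCheck() {
    }

    // Tries to open a TCP connection; returns true if something accepts it within the timeout.
    static boolean isReachable(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Covers refused connections, timeouts and hostnames that cannot be resolved
            return false;
        }
    }

    public static void main(String[] args) {
        // Hostname and port are the example values from this tutorial
        System.out.println("reachable: " + isReachable("homeserver.no-ip.org", 2012, 3000));
    }
}
```

Run it from a computer outside your home network. Testing from inside the same network can give misleading results, because many routers do not forward requests that come from the inside.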

Thursday, 8 March 2012

If condition depending on the previous command ( $? )

Say you're writing a bash script and have an if condition in it that depends on whether or not the previous command was "successful".

Example:

sleep 10; if (( $? )); then echo "sleep interrupted!"; fi

Now if you cancel the sleep command with Ctrl+C, the string "sleep interrupted!" will show.. $? holds the exit status of the previous command, and (( $? )) is true when that status is not zero, i.e. when the command did not finish successfully. Of course this example is not supposed to be exciting at all, but think of the possibilities! You don't necessarily have to work with true or false variables, you can just act depending on the previous command's state.


Friday, 2 March 2012

LG Flatron W2442PE driver


Here's the link for the driver:

http://i.minus.com/1334308662/KSAPufzULnjNIcLsAd6FlQ/dbbeciXFclZvzq.zip

http://www.uploadstation.com/file/RHGC25b/w2442pe.zip

Edit: One link dies, two more pop up ;) 12.04.12

Edit2: These links died also.. I'll try to post new ones asap.


Friday, 24 February 2012

"Journalists Marie Colvin and Remi Ochlik die in Homs"

American journalist Marie Colvin and French journalist Remi Ochlik were killed during random artillery strikes in Homs, Syria, on 22 February 2012. I did not know these people, but even their deaths didn't have enough impact for the world to actually care..

They are going to watch until the Assad government is tired and out of ammo, I guess. Nobody seems to give a fuck at the moment!

Sunday, 19 February 2012

Wednesday, 8 February 2012

Solution for fake redirection of Porn galleries (FF addon)

Well, here is a perfectly working Firefox addon just to clean out those redirections (if the original link is within the URL). It's working great!

https://addons.mozilla.org/de/firefox/addon/redirect-remover
