Hadoop 1.0.3 Sources

Why are the sources not available on the Hadoop website? I do not know..

Maven could not help me download the sources either :/

Anyway, here it is:

http://rapidshare.com/files/2237720743/hadoop-core-1.0.3-sources.jar

Enjoy! :)

Thursday, 29 November 2012

Wednesday, 21 November 2012

Java - Continue a process after an exception

Easier than I thought, actually..

Execution stops when an exception goes unhandled. So if you get a MalformedURLException, you need to catch it so your application knows what to do and how to continue in that case.

Surround the lines where the exception may occur with a try block, and catch the possible exception in a catch block:

import java.net.MalformedURLException;

try {
    // the lines that may throw a MalformedURLException
} catch (MalformedURLException e) {
    // decide what to do here; execution then continues after the catch block
}

Basically, just handle your exceptions to continue your process :)
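A minimal runnable sketch of this idea, assuming you are parsing a list of URL strings (the class name ContinueAfterException and the sample addresses are made up for illustration). The malformed entry is caught and reported, and the loop simply moves on to the next address instead of ending the program:

```java
import java.net.MalformedURLException;
import java.net.URL;
import java.util.ArrayList;
import java.util.List;

// Hypothetical example class; the name and sample addresses are illustrative only.
public class ContinueAfterException {

    // Tries to parse every address. A malformed one is reported and skipped,
    // so the loop (and the program) keeps going instead of terminating.
    static List<URL> parseAll(String[] addresses) {
        List<URL> valid = new ArrayList<>();
        for (String address : addresses) {
            try {
                // new URL(String) throws MalformedURLException, e.g. when the
                // protocol is missing. (Deprecated since Java 20, but it still works.)
                valid.add(new URL(address));
            } catch (MalformedURLException e) {
                System.out.println("Skipping malformed URL: " + address);
            }
        }
        return valid;
    }

    public static void main(String[] args) {
        String[] addresses = {"http://example.com", "no-protocol-here", "https://example.org"};
        List<URL> urls = parseAll(addresses);
        System.out.println("Parsed " + urls.size() + " of " + addresses.length + " addresses");
    }
}
```

Running main prints "Skipping malformed URL: no-protocol-here" followed by "Parsed 2 of 3 addresses": the bad entry is handled and the rest of the work still gets done.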

(I'm still new to this stuff.. feel free to correct me)

Monday, 19 November 2012

Create Sony SenseMe playlists for the music collection on your PC

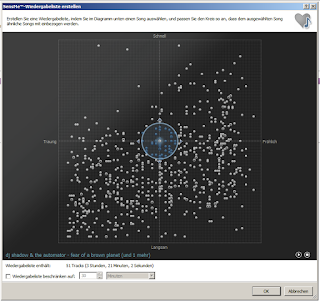

Sony's free software Media Go can actually do the same thing with the music collection on your PC. All you need to do is import your songs into its library and let the program do its work (so far it has taken about 4 hours for my 33 GB of data, and it's still working). Once it's finished, you can create SenseMe playlists by marking an area on the "mood scale", so to speak. The directions are called Fast, Slow, Sad and Happy. As you can see from my diagram, I quite dig sad, slow songs :D

My explanation is terrible once again. But you get the idea! (I hope :) )

Wednesday, 14 November 2012

Push and pull files with the Hadoop distributed cache

Push

Within run() (the place where you set up your mapper and reducer classes):

DistributedCache.addCacheFile(new URI("file:///home/raymond/file.txt"), conf);

conf is the Configuration I set up with:

Configuration conf = new Configuration();

Use file:// for the local filesystem and hdfs:// to target HDFS.

Pull

Now you need to know where the framework has cached your file. Within your mapper (or reducer) class you can call:

Path[] localFiles = DistributedCache.getLocalCacheFiles(context.getConfiguration());

localFiles now contains the local paths of all cached files. If you cached only one file, you can access it with:

String path = localFiles[0].toString();

Now you know where it's located, and you can pass the path to a FileReader to read its contents.
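A small sketch of that FileReader step, assuming the cached file is plain text that you want line by line (CachedFileReader and readLines are hypothetical names, not part of Hadoop). In a mapper, this read usually belongs in setup(), which runs once per task, rather than in map(), which runs once per record; the comment shows roughly how that could look.

```java
import java.io.BufferedReader;
import java.io.FileReader;
import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper, not part of Hadoop.
public class CachedFileReader {

    // Reads every line of the file at 'path' (e.g. the string from
    // localFiles[0].toString()) into a list. FileReader uses the platform default charset.
    static List<String> readLines(String path) throws IOException {
        List<String> lines = new ArrayList<>();
        try (BufferedReader reader = new BufferedReader(new FileReader(path))) {
            String line;
            while ((line = reader.readLine()) != null) {
                lines.add(line);
            }
        }
        return lines;
    }

    // Inside a Mapper (Hadoop 1.x, org.apache.hadoop.mapreduce API), roughly:
    //   protected void setup(Context context) throws IOException, InterruptedException {
    //       Path[] localFiles = DistributedCache.getLocalCacheFiles(context.getConfiguration());
    //       rules = CachedFileReader.readLines(localFiles[0].toString()); // 'rules' is a hypothetical field
    //   }

    public static void main(String[] args) throws IOException {
        // Standalone usage: pass the path of a local text file on the command line.
        for (String line : readLines(args[0])) {
            System.out.println(line);
        }
    }
}
```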

As usual, I'm trying to keep things as simple as possible. Let me know if there is something else I should mention about this process :)


Hadoop - MapReduce - java.lang.NoClassDefFoundError

I am working with Eclipse. After adding a file to the Hadoop distributed cache (I found the how-to at this LINK), I received the following error message:

INFO: Cached file:///home/aatmaca/git/common/rules.txt as /tmp/hadoop-root/mapred/local/archive/-5239344160850859454_-776894720_2117948037/file/home/git/common/rules.txt
14.11.2012 11:59:08 org.apache.hadoop.mapred.JobClient$2 run
INFO: Cleaning up the staging area file:/tmp/hadoop-root/mapred/staging/root-542082906/.staging/job_local_0001
Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/commons/io/FileUtils
    at org.apache.hadoop.fs.FileUtil.getDU(FileUtil.java:456)
    at org.apache.hadoop.filecache.TrackerDistributedCacheManager.downloadCacheObject(TrackerDistributedCacheManager.java:452)
    at org.apache.hadoop.filecache.TrackerDistributedCacheManager.localizePublicCacheObject(TrackerDistributedCacheManager.java:464)

I was sure this was not a Hadoop-specific problem. A simple import of org.apache.commons.io.FileUtils didn't do the trick (yes, I'm still new to this Java stuff :) ), so I had to ask my coding mentor for help. The reason: an import only helps the compiler resolve names, while NoClassDefFoundError means the class is missing from the classpath at runtime, so the commons-io JAR itself has to be added to the project.

Solution 1:

Download the latest commons-io JAR from:

http://mvnrepository.com/artifact/commons-io/commons-io/

Within Eclipse:

Right-click the project -> Properties -> Java Build Path -> Libraries -> Add External JARs...

and select your JAR file. It should now appear in your "Referenced Libraries" folder.

Now you should be able to execute the application without error messages.

Solution 2:

If you are working on a Maven project, just edit your pom.xml and add the commons-io dependency inside its <dependencies> section:

<dependency>
    <groupId>commons-io</groupId>
    <artifactId>commons-io</artifactId>
    <version>2.4</version>
</dependency>

Maven will then download the JAR automatically and add it to your Maven Dependencies.
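To confirm that either fix worked, here is a small sketch that checks whether a class can be loaded from the current classpath (ClasspathCheck and isOnClasspath are hypothetical names). Run it with the same classpath as your job, e.g. from the same Eclipse project; if it reports "NOT on the classpath", the JAR is still missing at runtime.

```java
// Hypothetical helper class; not part of any library.
public class ClasspathCheck {

    // Returns true if the class can be found by this class's loader.
    // initialize=false loads the class without running its static initializers.
    static boolean isOnClasspath(String className) {
        try {
            Class.forName(className, false, ClasspathCheck.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException | LinkageError e) {
            // LinkageError covers NoClassDefFoundError, e.g. when a class the
            // requested one depends on is itself missing.
            return false;
        }
    }

    public static void main(String[] args) {
        String name = "org.apache.commons.io.FileUtils";
        System.out.println(name + (isOnClasspath(name) ? " is on the classpath" : " is NOT on the classpath"));
    }
}
```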

Good luck!

Thursday, 8 November 2012

Borderlands 2 sucks!

This game fucking sucks! And I really really tried..

I don't remember why, but I really loved the first episode. The missions were kind of creative and there was some kind of story development going on that you could follow.

I got really pissed off at how simple and boring the missions got in the second game! Trying to find a home for a dog, collecting medicine for brain-diseased people, collecting organs for Zed, collecting tapes for Tannis, collecting flowers and magazines for that fucking Catch-A-Ride redneck! Really?? These are the best ideas you could come up with? Running around and collecting stuff? I am uninstalling this game after giving it a try for more than three weeks. I am almost done with the story, but I just can't care anymore. It gets more and more boring with every mission. I no longer care what the vault is all about or what happens if you find the source of Eridium!! Fuck you people!

But I have to say, Moxxi is one horny, busty, dirty whore!


Some useful Linux shell commands

Don't judge, this pile's evolution has just started! ;)

Splitting a file into several parts (based on file size)

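split -b 200M BigFile.txt

This splits the file into 200 MB pieces (M means 1024 × 1024 bytes), named xaa, xab, xac and so on. Use M for megabytes and k for kilobytes; a number without a suffix means plain bytes. Careful: in GNU split a trailing b means 512-byte blocks, not bytes. Check out the manual (man split) for more options.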
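If you ever need the same thing inside a Java program rather than on the shell, here is a sketch that mimics split -b: fixed-size pieces named with the default x prefix and two-letter suffixes. FileSplitter, its method names and the 676-piece limit are my own assumptions, not anything split itself defines.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;

// FileSplitter is a hypothetical helper, not part of any library.
public class FileSplitter {

    // split-style two-letter suffixes: 0 -> "aa", 1 -> "ab", ..., 26 -> "ba".
    // Two letters allow at most 26 * 26 = 676 pieces in this sketch.
    static String suffix(int index) {
        if (index >= 26 * 26) {
            throw new IllegalArgumentException("more than 676 pieces");
        }
        return "" + (char) ('a' + index / 26) + (char) ('a' + index % 26);
    }

    // Copies 'source' into pieces of at most chunkSize bytes named
    // prefix + "aa", prefix + "ab", ... inside targetDir; returns the pieces in order.
    static List<Path> split(Path source, long chunkSize, Path targetDir, String prefix) throws IOException {
        if (chunkSize <= 0) {
            throw new IllegalArgumentException("chunkSize must be positive");
        }
        List<Path> pieces = new ArrayList<>();
        byte[] buffer = new byte[8192];
        try (InputStream in = Files.newInputStream(source)) {
            while (true) {
                // Read the first bytes of the next piece; -1 means the input is used up,
                // so no empty trailing piece is created.
                int n = in.read(buffer, 0, (int) Math.min(buffer.length, chunkSize));
                if (n == -1) {
                    break;
                }
                Path piece = targetDir.resolve(prefix + suffix(pieces.size()));
                try (OutputStream out = Files.newOutputStream(piece)) {
                    long remaining = chunkSize;
                    while (n != -1) {
                        out.write(buffer, 0, n);
                        remaining -= n;
                        if (remaining == 0) {
                            break; // this piece is full
                        }
                        n = in.read(buffer, 0, (int) Math.min(buffer.length, remaining));
                    }
                }
                pieces.add(piece);
            }
        }
        return pieces;
    }

    public static void main(String[] args) throws IOException {
        // Usage (hypothetical): java FileSplitter BigFile.txt 209715200
        // 209715200 = 200 * 1024 * 1024, the same piece size as split -b 200M.
        Path source = Paths.get(args[0]);
        long chunkSize = Long.parseLong(args[1]);
        for (Path piece : split(source, chunkSize, Paths.get(""), "x")) {
            System.out.println(piece);
        }
    }
}
```

Streaming through an 8 KB buffer keeps memory use flat, so a 200 MB piece size does not mean 200 MB in RAM.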
